Future Now: Detailed AI and Tech Developments

AI Pioneers Win Nobel Prize: Triumph and Warnings

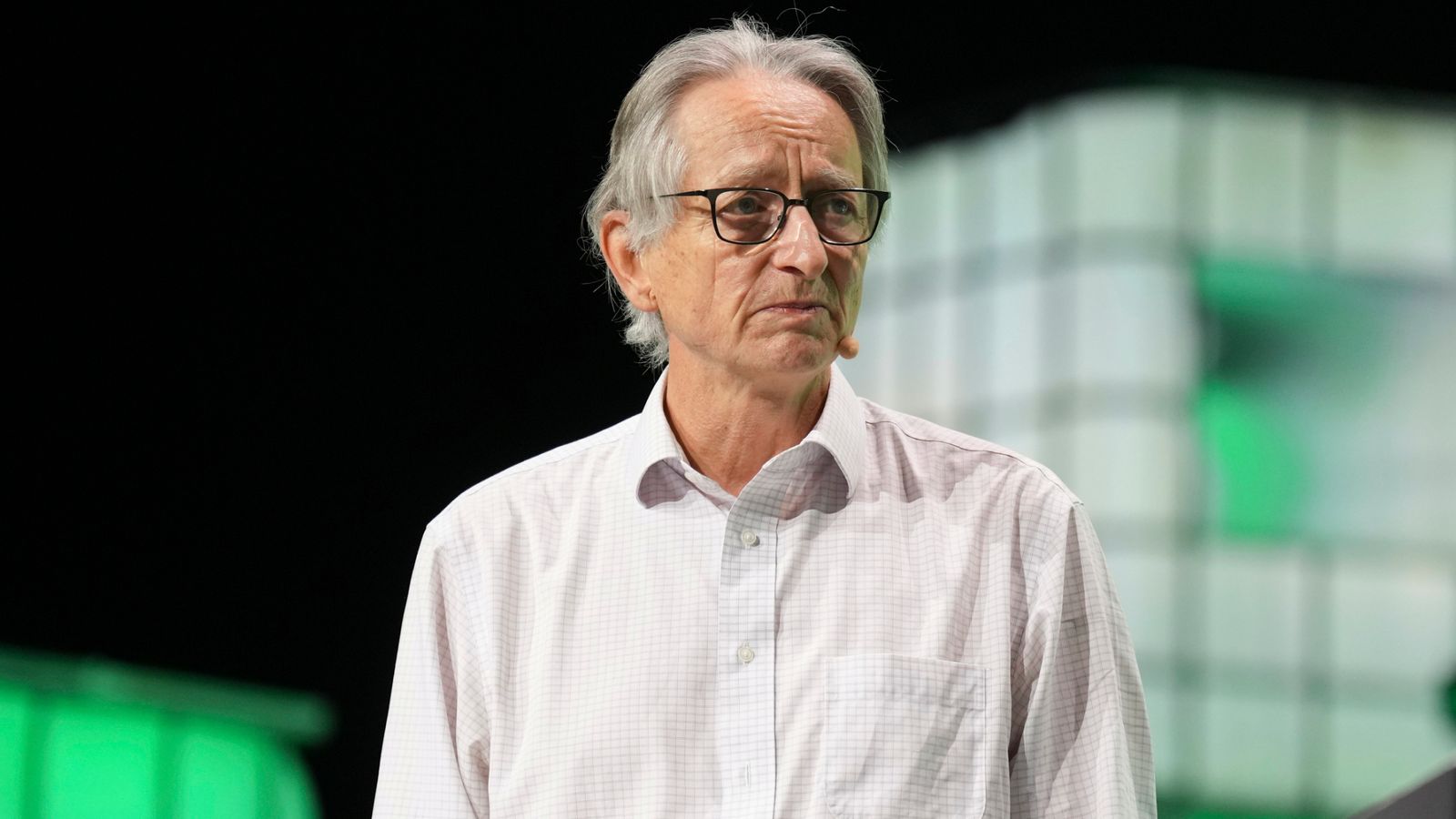

The news was published on Tuesday, October 8th, 2024. I'm Mike. So get this. The world of science just got a major shake-up, and it's all about those brainy machines we've been hearing so much about. You know, the ones that can beat us at chess, write poems, and maybe even take over the world one day? Yeah, those. Well, turns out the guys who helped kick off this whole AI revolution are finally getting their due. John Hopfield and Geoffrey Hinton, two brilliant minds who have been tinkering with artificial neural networks for decades, just bagged the 2024 Nobel Prize in Physics.

Now, I know what you're thinking. Mike, what the heck is an artificial neural network? Well, imagine your brain, but instead of squishy gray matter, it's all wires and code. These networks are basically the secret sauce behind a lot of the AI magic we see today. These two geniuses spent years figuring out how to make machines learn like our brains do. It's not just about cramming information into a computer. It's about teaching it to think, to adapt, to get smarter on its own. And let me tell you, that's no small feat. It's like teaching a toaster to appreciate fine art. But somehow they cracked it, and now we've got AI that can recognize faces, translate languages, and even create art.

But here's where it gets really interesting. Geoffrey Hinton, this guy they're calling the godfather of AI (and let's be honest, that's a pretty cool nickname), is not just basking in the glow of his success. No, he's actually got some serious concerns about the very technology he helped create. I mean, talk about a plot twist, right? Just last year, Hinton up and quit his job at Google. Now, when a top expert in AI decides to jump ship from one of the biggest tech companies in the world, you know something's up. He's been warning us about the potential dangers of AI, saying stuff like it might be hard to stop bad guys from using it for nefarious purposes.
It's like he's opened Pandora's box and is now trying to slam it shut before all hell breaks loose. And get this. He even said he sometimes regrets his life's work. Can you imagine? Spending decades on something, revolutionizing an entire field, and then wondering if you've made a huge mistake? It's like inventing the world's best chocolate cake and then realizing it might give everyone diabetes. Talk about a moral dilemma.

Now, let's rewind the clock a bit and talk about some major milestones in AI's journey to becoming the talk of the town. Remember 1997? Spice Girls were dominating the charts. Titanic was making everyone cry in theaters. And oh yeah, a computer beat the world chess champion. That's right, IBM's Deep Blue went head to head with Garry Kasparov, the Russian grandmaster who'd been crushing it in the chess world for years. Picture this: a room filled with tension, cameras rolling, and a computer facing off against one of the greatest human minds in chess. It was like something out of a sci-fi movie, except it was happening in real life. Deep Blue wasn't just any old machine. It was a supercomputer, specifically designed to play chess, capable of evaluating 200 million positions per second. Talk about processing power.

The match was a roller coaster. Kasparov won the first game, and everyone thought, ah, humans still got it. But then Deep Blue came back swinging, winning the second game. The next three games ended in draws, and it all came down to the final showdown. In a stunning turn of events, Deep Blue clinched the victory, becoming the first computer to defeat a reigning world champion in a classical chess match.

This wasn't just a win for IBM or a loss for Kasparov. It was a watershed moment for artificial intelligence. It proved that machines could outperform humans in specific complex tasks that were once thought to be the exclusive domain of human intellect. Chess, after all, isn't just about moving pieces around a board.
It requires strategy, foresight, and a kind of intuition that many believed only humans could possess.

But hold your horses, because AI wasn't done showing off yet. Fast forward to 2011, and we've got another IBM brainchild stealing the spotlight. This time it's Watson, and it's not here to play chess. It's here to play Jeopardy. Now, if you've never watched Jeopardy, imagine a quiz show where the answers are given first, and contestants have to come up with the right questions. It's not just about knowing facts. It's about understanding context, wordplay, and nuanced language.

Watson wasn't just any old computer program. This bad boy was designed to understand natural language, process vast amounts of information, and come up with answers faster than you can say Alex Trebek. It had access to 200 million pages of structured and unstructured content, including the full text of Wikipedia. But here's the kicker. It wasn't connected to the internet during the game. All that knowledge was stored locally. It's like having a library the size of the Library of Congress in your pocket, but being able to find exactly what you need in microseconds.

The showdown was epic. Watson faced off against Ken Jennings and Brad Rutter, two of the most successful human contestants in Jeopardy history. These guys were no slouches. Jennings had a 74-game winning streak, and Rutter had never lost to a human opponent. But Watson mopped the floor with them. Over the course of two games, Watson racked up $77,147, leaving Jennings with $24,000 and Rutter with $21,600.

Now, this Watson victory wasn't just a fun little game show moment. It was a huge deal in the world of AI. It showed that machines could understand and process natural language in a way that was genuinely competitive with humans. And that's got some pretty big implications for the future.

Looking ahead, we're likely to see AI systems becoming even more deeply integrated into scientific research.
I'm talking about AI that's not just crunching numbers, but actually helping to design experiments and interpret results. Imagine an AI system that can sift through millions of potential drug compounds and identify the most promising candidates for treating a disease, or picture an AI that can analyze complex climate models and spot patterns that human scientists might miss. It's like having a super smart lab assistant who never sleeps and can process information at lightning speed.

But here's the thing. With great power comes great responsibility, right? And that's where the concerns of folks like Geoffrey Hinton come into play. We might see a real push for more stringent regulations on AI development and deployment. It's not just about making sure AI doesn't go all Skynet on us. It's about addressing more subtle issues too. Like, how do we make sure AI systems aren't perpetuating biases? How do we ensure they're making decisions in a way that's transparent and accountable? It's like trying to childproof a house, except the child is potentially smarter than us and can learn at an exponential rate.

This could lead to a surge in what we call ethical AI initiatives. Think of it as a kind of AI charm school where we teach these systems not just how to be smart, but how to be good. We're talking about developing AI that's not just powerful, but also transparent, fair, and aligned with human values. It's like trying to raise a well-behaved genius child who also happens to be able to process terabytes of data in seconds.

The tricky part is we're in uncharted territory here. We're creating something that could potentially outthink us, and we need to figure out how to do that safely. It's a bit like teaching a teenager to drive, except the car can fly, teleport, and make decisions on its own. We need to establish some serious ground rules and safeguards.

But here's the silver lining. All this concern and caution could actually lead to better, more responsible AI development.
We might see more collaboration between AI researchers, ethicists, and policymakers. We could end up with AI systems that are not just incredibly powerful, but also trustworthy and beneficial to humanity.

It's an exciting and slightly nerve-wracking time in the world of AI. We're standing on the brink of some truly revolutionary advancements, but we're also grappling with some pretty hefty ethical questions. It's like we've invented fire, and now we need to figure out how to use it without burning down the forest. The next few years are gonna be crucial in shaping how we develop and deploy AI technologies. And let me tell you, as someone who's been following this field for years, I can't wait to see what happens next.

The news was brought to you by Listen2. This is Mike, signing off and reminding you to stay curious, stay informed, and maybe start brushing up on your trivia skills. Who knows, you might need to go up against an AI on Jeopardy someday.